Build Software By Trial-And-Error Or By Careful Planning?

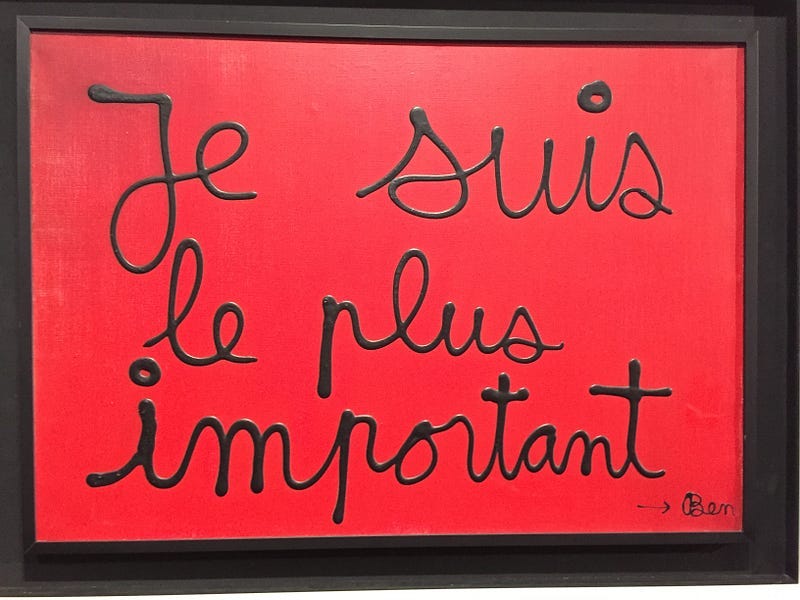

“No matter who you are — a welder, philosopher, a guitarist or a president — you are in some sense simultaneously making the map of your life and following it. It is not an exaggeration to say life itself is one long improvisation.” -Stephen T. Asma

Most people are good at figuring out how to get a simple task done. We can attribute this ability to common sense. Thanks to common sense, it doesn’t take long to train someone on a simple, repetitive task.

But employing humans to do simple, repetitive tasks is not very prudent. People get tired on the job and then become prone to all kinds of silly mistakes. Also, people’s time is expensive, especially compared to the cost of a machine’s time.

These two reasons are driving the software revolution. It should be quite easy to program machines to do simple, repetitive tasks. We call that process automation: replace expensive, error-prone humans with programmable machines. It’s a win-win proposition.

There Is More Than One Way To Automate A Task

Speaking in broad terms, there are two ways to approach automating a task:

Automate by emulating empirical evidence

Automate by providing a formalized function

The above division fits into two familiar categories: imperative and functional (declarative) programming. Although the two disciplines appear to conflict, they actually go hand in hand. We may call the imperative mode of programming ‘intuitive’. In contrast, we could call the functional mode ‘knowledge-based’. Rather than competing, the two complement one another. As Bill Evans said:

Intuition has to lead knowledge, but it can’t be out there alone.
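To make the two modes concrete, here is one small task written both ways in Python: collecting the lines of a document that contain a given pattern. The function names are ours, for illustration only, and since Python is not a purely functional language the second version only approximates the declarative style.

```python
def matching_lines_imperative(text, pattern):
    # Imperative: spell out each step and mutate an accumulator as we go
    result = []
    for line in text.splitlines():
        if pattern in line:
            result.append(line)
    return result


def matching_lines_functional(text, pattern):
    # Functional style: describe the result as a single expression,
    # with no step-by-step mutation of intermediate state
    return list(filter(lambda line: pattern in line, text.splitlines()))
```

Both return the same list; the difference lies in whether we dictate how to build it or state what it should contain.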

Let’s look at the empirical approach first.

Observe, Listen, Act, Learn

Intuitive skills precede knowledge. Any time we find ourselves in an untamed environment, we lack the knowledge necessary to adapt. An unfamiliar situation forces us to abandon our prior knowledge. When we don’t know how to act appropriately, we resort to simulation.

How do we simulate? By observing, listening, then acting, then learning whether our simulation was successful. If it was successful, continue in that direction. If our simulation was a failure, cut our losses and pick another battle.

Rinse, repeat. Does that sound familiar? Since you are into software (otherwise, why are you reading this?), you are probably now thinking of the REPL (Read-Eval-Print Loop). Yes, the REPL is an intuitive way of gaining knowledge about unfamiliar territory. When following the REPL method, we are honouring the time-tested empirical approach to gaining knowledge. We are making the map and immediately following it. We are progressing by trial and error.
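A toy REPL makes the loop visible. The sketch below is in Python (our choice; the article names no language), and `run_repl` is a hypothetical helper that takes a list of input lines and collects what would be printed instead of reading from a keyboard. It leans on Python’s built-in `eval` and `exec`, so it is an illustration only, not how production REPLs are built, and it should never be fed untrusted input.

```python
def run_repl(lines):
    """A toy read-eval-print loop over a list of input lines.

    Returns what a real REPL would print, so each trial and its
    outcome can be inspected afterwards.
    """
    env = {}      # shared namespace: knowledge accumulates across trials
    printed = []
    for line in lines:                       # Loop + Read: one line at a time
        try:
            try:
                value = eval(line, env)      # Eval: try the line as an expression
            except SyntaxError:
                exec(line, env)              # not an expression (e.g. x = 2): run it
                continue                     # statements print nothing
            printed.append(repr(value))      # Print the result
        except Exception as err:
            printed.append(f"error: {err}")  # a failed trial, reported at once
    return printed
```

For example, `run_repl(["x = 2", "x * 21"])` returns `['42']`; a line that fails, such as `"x / 0"`, yields an `error:` entry instead of stopping the loop, and we simply try again.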

Let’s now examine how the non-empirical, knowledge-based approach differs.

Think Before You Act

The knowledge-based approach relies on tried-and-tested knowledge that spurns improvisation. There is a prescribed way of accomplishing something, which renders the empirical, intuition-driven approach useless.

This approach expects us to slow down and measure twice, cut once. Instead of frantically cutting and testing whether the cut pieces fit, we are now supposed to measure first. But how do we know what to measure?

We need a specification, a blueprint. Who provides that blueprint?

We’ve seen that in the world of intuitive, empirical simulation, there is no blueprint. We are conjuring up that blueprint, that map, as we go. But in the world of prescribed, knowledge-based processing, the map is already pre-made. Someone hands us the map, the blueprint, and we are now supposed to devise a plan of action. Thus we are advised to think before we act.

Who Provides The Map?

When we are exploring unknown territory, trial-and-error is the only possible way to go. Trial-and-error is a risky business, as it does not guarantee future success. It prevents us from predicting when, if ever, we will reach our destination.

It is thus desirable (and advisable) to avail ourselves of some kind of map: a blueprint of sorts, a specification. What is it that we are cutting? What is our destination, and how do we get there?

The map could come from three possible sources:

Prior experience

Business analysis

Computer science

The business analysis source is all about the intention (i.e., what we need to accomplish). The computer science source is all about the implementation (i.e., how we are to do it). Prior experience is about battle scars, and the ability to recall how to avoid those scars (what not to do and how not to do it). But prior experience is also about positive outcomes, and remembering what worked well in similar situations.

What To Do In The Absence Of A Map?

We may not have access to a good source of business analysis (or it could be that the problem doesn’t need one). We also may not have any prior experience to draw on when exploring unfamiliar territory. Given the absence of a proper map, we now have two choices:

1. Rely on intuitive simulation (trial-and-error)

2. Rely on our formal training (i.e. computer science)

An example may help clarify things here: how do we transform a document by replacing a certain recurring pattern with another pattern? It is a simple enough challenge, one that a programmer may tackle in more than one way.

Intuitive Simulation (Trial-And-Error)

One way to tackle the above challenge is to start doing the transformation by hand. Open the document under study and find the instances of the recurring pattern. Then replace each one by typing in the new, desired pattern. Keep an eye on how straightforward the manual process is. Are there any exceptions, or is everything very mechanical, very predictable?

To keep things simple (for now), suppose we conclude that the above exercise was straightforward. No variations, no exceptions, no gotchas. That would be the simplest possible use case, and it should be dead easy to automate. We could devise a global find-and-replace script.
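Here is a minimal Python sketch of such a global find-and-replace script, assuming the document is a UTF-8 text file. The names `find_and_replace` and `transform_file` are hypothetical. Because the manual exercise revealed no exceptions, a single call to `str.replace`, which replaces every occurrence, covers the whole job.

```python
from pathlib import Path


def find_and_replace(text, old, new):
    """Replace every occurrence of `old` with `new`; no exceptions, no branching."""
    return text.replace(old, new)


def transform_file(path, old, new):
    """Read the document, replace the pattern everywhere, and write it back."""
    document = Path(path)
    original = document.read_text(encoding="utf-8")
    document.write_text(find_and_replace(original, old, new), encoding="utf-8")
```

Calling `transform_file("report.txt", "colour", "color")` (file name and patterns are only examples) would rewrite the file in place.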

To make things even simpler, let’s not write the entire script from the ground up. Instead of programming our script to access the document storage, find the document, open it, read it, etc., let’s leverage an existing app: the popular text editor Emacs. We will create what Emacs calls a keyboard macro (basically a script).

The easiest way to create a macro in Emacs is to perform an operation by hand while recording it. To do that, open the document in Emacs and hit the Ctrl-x ( key combination. That command starts recording all the keystrokes we perform.

Now hit Ctrl-a to go to the start of the line. Next hit Ctrl-space to set the mark. Then hit Ctrl-s and type the pattern you wish to replace. Hit Return to exit the search; the cursor now sits just after the found instance of the pattern. Delete that instance (for example, by pressing Backspace once per character), then type the new pattern to replace the old one. Following that, hit Ctrl-e to move to the end of the line. Finally, hit the Ctrl-x ) key combination to end the recording of the macro. The recorded macro can then be replayed with Ctrl-x e.
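Conceptually, a recorded macro is nothing more than a fixed list of editing commands replayed in order. The Python sketch below models that idea. The `Buffer` class, its command names and the `colour`/`color` patterns are hypothetical and only loosely mirror the Emacs keystrokes: the mark is left out, and deletion is modelled as pressing Backspace once per character, since after the search the cursor sits just past the match. Note that `replay` makes no decisions at all.

```python
class Buffer:
    """Hypothetical, simplified text buffer with a cursor ("point")."""

    def __init__(self, text):
        self.text = text
        self.point = 0

    def line_start(self):                 # like Ctrl-a
        self.point = self.text.rfind("\n", 0, self.point) + 1

    def line_end(self):                   # like Ctrl-e
        end = self.text.find("\n", self.point)
        self.point = len(self.text) if end == -1 else end

    def search_forward(self, pattern):    # like Ctrl-s pattern Return
        index = self.text.find(pattern, self.point)
        if index == -1:
            raise ValueError(f"search failed: {pattern!r}")
        self.point = index + len(pattern)  # cursor lands just after the match

    def delete_backward(self, count):     # like pressing Backspace `count` times
        self.text = self.text[:self.point - count] + self.text[self.point:]
        self.point -= count

    def insert(self, string):             # like typing `string`
        self.text = self.text[:self.point] + string + self.text[self.point:]
        self.point += len(string)


def replay(macro, buffer):
    """Replay every recorded command, in order, exactly as recorded."""
    for command, *args in macro:
        getattr(buffer, command)(*args)


# The "recording": the same commands every time, with no room for conditions
macro = [
    ("line_start",),
    ("search_forward", "colour"),
    ("delete_backward", len("colour")),
    ("insert", "color"),
    ("line_end",),
]
```

Replaying `macro` on a `Buffer("The colour of the sky.\nAnother colour here.")` fixes the first line; a second replay fixes the second; a third raises `ValueError`, because the exact recorded pattern no longer exists.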

We can store the script we have recorded, which will come in handy for future use. We have now created a computer program, a piece of software that automates manual labour. We can use this piece of software as many times as we wish and with it transform as many documents as needed. We can also share this piece of software with others, thus making it even more useful.

But realistically, how useful is this script we have produced in the above exercise? Truth be told, not much. It is only useful if any document needing transformation contains the exact pattern we’ve recorded. Also, it is quite unlikely that any useful transformation will be that straightforward. It is much more likely that there will be variations in the processing. For example, it could be that one variation of the pattern is necessary when found in the middle of a paragraph. A different variation of the same pattern must apply if it follows a subsection title. Many (countless?) other variations are possible, of course.

It’s easy to see that not every instance of automated processing is straightforward. Varying conditions may influence the end result. We have now encountered conditional processing logic (sometimes called branching logic).

A plain recording of an operator’s keystrokes cannot capture conditional processing logic. The only way to produce a script capable of branching logic is to program it. That means we need to roll up our sleeves and hand code that script.
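A hand-coded Python sketch of such branching logic follows. The rule that decides the context is an assumption made for illustration: a line starting with `#` counts as a subsection title, the line right after a title gets one replacement, and every other line gets another. `transform` and its parameters are hypothetical names.

```python
def transform(text, old, new_after_heading, new_elsewhere):
    """Replace `old`, choosing the replacement based on where it appears.

    Hypothetical rule: lines starting with '#' are subsection titles and
    are left untouched; the line right after a title uses
    `new_after_heading`; every other line uses `new_elsewhere`.
    """
    output = []
    previous_was_heading = False
    for line in text.splitlines():
        is_heading = line.startswith("#")
        if is_heading:
            output.append(line)  # leave subsection titles as they are
        elif previous_was_heading:
            output.append(line.replace(old, new_after_heading))
        else:
            output.append(line.replace(old, new_elsewhere))
        previous_was_heading = is_heading
    return "\n".join(output)
```

For example, `transform("# Intro\nTODO first\nTODO second", "TODO", "Note:", "note:")` returns `"# Intro\nNote: first\nnote: second"`. Each `if`/`elif`/`else` is exactly the kind of decision a keystroke recording has no way to express.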

Hand coding a script doesn’t mean that we have left the trial-and-error territory. We are still simulating the processing that a human operator would perform. But we must now abandon our hopes of recording the manual processing. Instead, we must program it, instructing the computer what to do on our behalf.

While we’re staying in the trial-and-error territory, we are using the intuitive approach. For that, we always resort to the imperative mode of programming. We instruct the machine on how to process something by barking orders at it. Something like:

“First do this, then do that, then check if this is true and if yes, do this, else do that other thing”. And so on. It feels like babysitting the machine, handholding it during the processing. And that’s exactly what we’re doing.

Imperative programming languages, by design, take those specific orders and execute them. Such languages have no opinion about the instructions they process. It is our responsibility to instruct the machine to do something and then verify whether the results are correct. If the results are not what we expected, we declare our trial erroneous. We then work on correcting that error. This is why we call this approach to building software ‘trial-and-error’. We are building a map while we’re walking treacherous territory. It can get a bit unnerving, to say the least.
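The trial-and-error cycle itself can be sketched in Python as well. Below, `run_trial` (a hypothetical helper) runs a candidate transformation on a sample and compares the result with what we expected. The machine has no opinion, so the expected output is ours to supply. The `colour`/`color` patterns are only an example: the first attempt fails on a capitalized word, and the corrected second attempt passes.

```python
def transform_v1(text):
    # First trial: a plain find-and-replace
    return text.replace("colour", "color")


def transform_v2(text):
    # Second trial, after the first one failed: also handle the capitalized form
    return text.replace("colour", "color").replace("Colour", "Color")


def run_trial(candidate, sample, expected):
    """Run one trial and judge its outcome against the expected result."""
    actual = candidate(sample)
    if actual == expected:
        return True, "success"
    return False, f"error: expected {expected!r}, got {actual!r}"


sample = "Colour and colour"
expected = "Color and color"
print(run_trial(transform_v1, sample, expected))
print(run_trial(transform_v2, sample, expected))
```

The first call reports an error, which sends us back to correct the script; the second reports success. Each failed trial adds a little more detail to the map we are drawing.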

Tune in for the upcoming Part 2 of the journey from trial-and-error to careful planning.