Computer Programming started with TDD… and is still stuck there!

What is computer programming? One could say it’s the process of creating a system that is capable of processing information.

What is computer programming? One could say it’s the process of creating a system that is capable of processing information.

OK, what is information then? According to Gregory Bateson, information is “any difference which makes a difference in some later event.”

In a nutshell, we’re talking about differences that make a significant signal to us. That’s what the act of programming computers seems to boil down to.

How did it all start?

First attempts at programming computers were messy. Basically, first computer programmers were crafting automated processing by wiring the machines manually.

Soon after the initial struggle, engineers have introduced punched cards:

Both manual rewiring the computers and feeding them punched cards were cumbersome and counter-intuitive. Still, punched cards offered a bit more legibility to the engineers.

How do we know that computer programs work?

One problem computer programming pioneers were faced with was how to confirm that the changes to the system (i.e., changes to the binary code installed in the computer) actually do work correctly?

To solve that problem, they came up with a simple, but at the same time quite brilliant idea. But before we describe that idea, let’s first examine some fundamental principles:

What is a computer? A computer is a machine capable of automated processing. It is capable of intake (i.e., can be fed some information), and once the intake happens, a computer can automatically process that information (i.e., transform some values).

OK, that’s nice, but what’s the use of that processing? If we feed some information (i.e., some values) to the computer, and the computer automatically processes those values by transforming them according to some rules defined by the programmers, the results of that process remain inside the big box (i.e., inside the big machine). How can that be of any use to us?

Well, similar to how computers are capable of intake, they’re also capable of producing outputs. Computers can spit out the transformed values.

Here is how the process works with punched cards: programmers prepare a blank punch card by poking (i.e., punching) holes in the card. Those holes would then represent some value(s). The card gets fed into the computer, the computer reads the value(s) and then transforms those by following the rules implemented in the machine code. Once the transformation of the input value(s) is completed, the computer takes another blank punch card and automatically punches it to represent the resulting value(s). It then ‘spits out’ (i.e., delivers) the resulting punched card. In a way, the computer almost works like a sausage machine.

OK, back to the initial question: how do we know that the delivered punched card contains correct value(s)? Well, we can sit down and examine the newly minted punched card to see if indeed it contains the expected value(s).

But that process is tedious and cumbersome, so the pioneering computer programmers came up with a much more elegant solution: they’d pre-punch the expected value(s) into a blank punch card. Then they’d run the program, and once the collect the punched card produced by the computer, they’d overlay that card with the pre-punched card. They’d then raise both cards against some source of light, and at that point it would be immediately visible whether both cards are punched in the identical places. If they are, we know that the program works as expected. If not, we have a failure and we need to fix it.

Ta-da! That’s how Test-Driven Development (TDD) was born!

In a nutshell, TDD is the process whereby we prepare the input values, the expected values, and once we run the system by inserting the input values and collect the actual values, we compare the actual values with the expected values. The test states that the actual and the expected values must match. If they do match, the test passes. If the values don’t match, the test fails.

It is nearly impossible to read punched cards

One problem, though. When we look at a punched card, it conveys zero useful information to us. Yes, properly designed machines can easily derive useful information from that punched card, but human engineers can’t. And if humans cannot understand the system, we have created a poorly engineered system.

So, the effort was underway to fix it. How did engineers go about fixing it? By reaching out to the already familiar technology. Basically, when it comes to processing information, the most familiar medium is language. And the most familiar formalized way to express information is text.

Text is the representation of the language. Instead of getting stuck with the obtuse visual representation of information (i.e., punched cards), computer programming practice switched to using text. Much more legible for humans.

How to insert text into a computer?

Early computers had no way to accept text. Engineers looked for a way to enable that functionality, and again reached out to what’s the most familiar mechanical way to enter text — typewriters.

Many educated humans were, at that time, already familiar with the mechanical typewriters with keyboards. So, adding the keyboard to the computer provided a familiar interface.

But was it prudent to do so? Well, history of engineering illustrates how every breakthrough always goes through an awkward period of half-baked solutions. Those solutions are enabling the functionality only halfway through. It usually takes another breakthrough to deliver a fully fleshed solution.

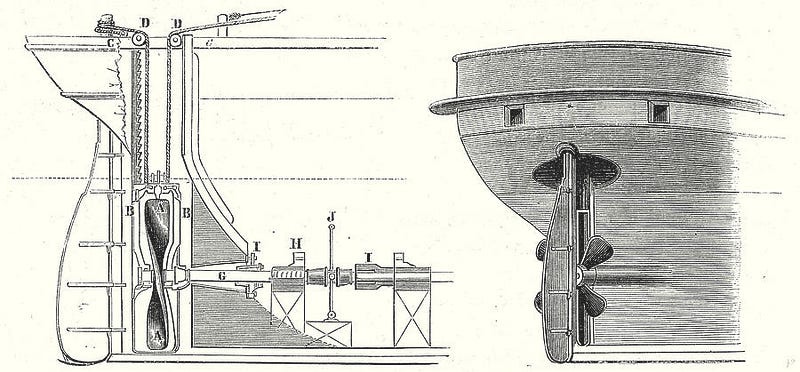

For example, steam engine. When steam engine was invented in 1698 by Thomas Savery (and later improved in 1712 by Thomas Newcomen), one of its first applied functions was to be used for drilling holes in the ground. Later, someone realized they could place the steam engine on a vehicle traveling along some tracks, and that’s how the first locomotive was born.

The locomotive pulling the train was so successful, that people realized that steam engines could also be used to power the boats. However, first implementation of the steam engine powered boats was hilarious — engineers basically made the steam engine move the oars:

It took a bit more ingenuity to come up with a better, more efficient solution — propeller!

At this point (in the year 2022), software engineering is still at the stage similar to using a steam engine to power the ores on a steamboat. The equivalent breakthrough (i.e., the breakthrough achieved by replacing the ores with the propeller) still hasn’t happened in the software industry. We are still waiting for some ingenious breakthrough that would replace the typewriter (the mechanical keyboard) with a more powerful, more meaningful way of interacting with the information processing machines.

Text easily conveys information

Replacing punched cards with text was a big step forward in the world of computer programming. Instead of squinting and striving to make heads or tails from a card that is punched in several places, computer programmers could suddenly express their intentions by writing text. They started using familiar keyboards for writing the text that could be fed to a computer.

That’s how computer code (i.e., the machine code) got abstracted and converted to text. But the conversion was not bidirectional. While programmers reaped big benefits from switching to a more intuitive way of working (i.e., writing text), computers did not benefit at all. Computers do not understand text. At least, they cannot understand text that is understandable to most engineers.

The question is, now that it became easier for human programmers to express their intentions and to consume the outputs produced by computers, is Test Driven Development still necessary?

Despite making improvements in the way programmers express their intentions by writing text, it is still not possible to verify if those intentions were expressed properly and if the computers are processing the information the way we expect them to. That problem stems from the fact that computers do not understand text. But also, the text that represents the source code often gets misread by humans as well.

Text also tends to be ambiguous

Computer programs, written as plain text (i.e., source code) differ significantly from natural human languages. In what ways?

Firstly, computer source code is lexically different from natural languages. Computer programming languages are composed of a severely limited vocabulary (some words are reserved as programming language keywords), while other words remain free-form in order to act as unique identifiers that help name the program constructs (e.g., variable names, method names, module names, etc.)

Secondly, computer source code is syntactically laid out and organized different than the way natural languages are. Formally defined structures play much more important role in computer programming languages than they play in natural languages. Furthermore, almost all programming languages feature multiple forms of indented layouts (horizontal and vertical). Such layout is seldom, if ever, encountered in written natural languages.

Thirdly, and most importantly, there is the difference in semantics: text written in a natural language is typically understood in two simultaneous (concurrent) phases. Those phases denote:

Text (how it is written down)

Domain (what does the text mean)

These two phases of simultaneous comprehension are insufficient when reading a computer program (code-as-text). To understand the computer source code, we need a third dimension: execution.

To discover the operational semantics of the program’s source code, we are required to trace the source code execution by reading the source code.

Easier said than done. Oftentimes, opening a file containing code-as-text does not give as an easy access to grasping what is that program supposed to be doing. Unlike with natural language written down as text, where we can safely start from the top of the page/screen and follow the story as it unfolds, row by row, paragraph by paragraph, computer source code does not necessarily proceed in that fashion.

The problem therefore lies in the fact that code-as-text cannot provide us with clear understanding of how the program will behave. We can only know for sure how the program works and what does the program execute if we execute it.

Why is that a problem?

Seeing how code-as-text is an intermediary layer between human programmer and the machine that runs the program, we observe that there is an unavoidable lag between the moment when we make a change (a diff) to the source code and the moment when we learn about the impact of the change we’ve made. This lag is the crux of the problem.

In many environments, it takes a considerable amount of time between us making a diff and seeing the effects of that diff. This inevitable slowdown is negatively affecting the quality of our work.

In contrast, almost any other crafting activity is free of this annoying lag. If we’re building a cabinet, for example, the material and the tools we’re using will inform us, instantaneously, about the effects of the change we’re making. We get feedback in real time, and that feedback is ensuring that we proceed without making serious mistakes.

None such real time feedback is available in the activity of software engineering. When writing software, we work with code-as-text, which is incapable of giving us real time feedback. Once we introduce some change into the source code, we have to do a lot of acrobatics before we can see the result of that change. This is considerably slowing us down and therefore poses a serious problem when it comes to ensuring quality of our delivery.

Is there a solution?

Currently the only mainstream solution that exists for addressing the above problem is Test Driven Development (TDD). When doing TDD we focus on executing our program as frequently as possible. The only way to gain insight and understanding about the effect of the changes we make to the source code is to execute the program. And because TDD is based on writing micro tests, the time lag between the moment when we make the change and the moment we execute the program and witness the effects of that change should ideally be as short as it can get.

Is there room for improvement?

Looks like TDD is a crutch that was necessary for addressing the issues created by the code-as-text solution. Seeing the low traction rate that TDD practice is infamous for, it is obvious that TDD is not, nor will it become, the optimal solution.

If even after decades of teaching and evangelizing the TDD practice we haven’t seen any significant uptake, we have no recourse but to conclude that there’s gotta be a better way. When it comes to code-as-text, it is very hard to envision a better solution than TDD. So, rather than treating a symptom (TDD), we should look into fixing the cause (code-as-text).

Hypothetically moving away from programming computers using free-form text would definitely remove the bottleneck described above. Yes, but how to progress from using text to tell computers what we’d like them to do?

The future of our profession looks exciting. Someone might already be working on a very successful replacement of code-as-text. Once that happens, it will revitalize our industry.