How To Implement Volatile Code In A Lean Fashion

Photo by Alex Bunardzic

One of the most important principles to follow when building software is to isolate the code that frequently changes from the code that seldom changes. Failing to do that invariably results in code that is tightly coupled, which in turn produces software that’s anything but soft. In other words, failing to carefully design our code leads to quick hardening of the code, which leads to an unmanageable mess from which it is very hard to recover.

Knowing the above, we must always strive to separate those aspects of our code that are concerned with infrastructure from the aspects that are concerned with core business competencies. To put it more bluntly, we must identify the aspects of our system that are commodities, meaning they are easy to get off the shelf. Once identified, those commodities must be kept isolated from the value-added functionality that is the result of focused teamwork and therefore cannot be purchased or obtained from a third-party vendor or from the open source community.

Volatile Code

What is typically the most volatile layer of our code? It is the entities and business rules that drive business operations and further the business’s strategic and tactical agendas. If we analyze, over time, how code on any given project changes, we will notice that in most cases the underlying infrastructure and the so-called delivery mechanisms, once defined, pretty much stay put. What tends to change most frequently are the business entities (i.e. different products, shipping options, taxation values, etc.), as well as business rules (volatile algorithms determining operational issues such as when to apply which discount/promotional offer to which products, customers, etc.).

Now that we have identified the most volatile aspect of our code, we must fully isolate it from the underlying commodities (i.e. computing infrastructure and also the delivery and persistence mechanisms). We do that by specifying that dependencies only move in one direction, namely from the delivery mechanisms/infrastructure, to the business entities and operational rules. The meaning of unidirectional dependencies is as follows: source code that deals with business entities and operational rules is not dependent, in any way, on the source code that is responsible for delivering events and associated values and for operating the underlying computing infrastructure.

For example, if one of the operational rules is to notify customers that their purchase payment has been successfully processed, the rule triggering that notification must not be dependent on the notification delivery mechanisms. Whether the specified notification is going to be delivered via an email, an RSS or Atom feed, an SMS message, etc., is of no concern to the code that triggers the notification rule. Or, to put it slightly differently, a business entity such as a customer is never aware of the delivery channel called email service, but that delivery channel is very much aware of the existence of an entity called customer (because the delivery channel will be responsible for handling the customer’s name, email address, and other attributes necessary for successfully completing the delivery).
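The direction of that dependency can be sketched in a few lines of Ruby. All names here (`Customer`, `PaymentProcessedRule`, `EmailChannel`, `SmsChannel`, and the `deliver` method) are hypothetical and chosen only for illustration; this is a minimal sketch, not a prescribed design.

```ruby
# Core business entity: plain data, unaware of any delivery channel.
Customer = Struct.new(:name, :email, :phone)

# Operational rule: triggers the notification through whatever notifier
# it is handed. It never names email, SMS, or feeds.
class PaymentProcessedRule
  def initialize(notifier)
    @notifier = notifier
  end

  def apply(customer, amount)
    @notifier.deliver(customer, "Your payment of #{amount} has been processed.")
  end
end

# Delivery channels: these depend on Customer, not the other way around.
# Each one records what it would send in an in-memory outbox.
class EmailChannel
  attr_reader :outbox

  def initialize
    @outbox = []
  end

  def deliver(customer, message)
    @outbox << { to: customer.email, body: "Dear #{customer.name}, #{message}" }
  end
end

class SmsChannel
  attr_reader :outbox

  def initialize
    @outbox = []
  end

  def deliver(customer, message)
    @outbox << { to: customer.phone, body: message }
  end
end

customer = Customer.new("Ada", "ada@example.com", "+15550100")
email = EmailChannel.new
sms = SmsChannel.new

# The same rule works with either channel, unchanged.
PaymentProcessedRule.new(email).apply(customer, "25.00 USD")
PaymentProcessedRule.new(sms).apply(customer, "25.00 USD")
```

Swapping the channel requires no change to the rule or to the customer entity, which is the point of keeping the dependency pointing inward.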

Different layers of our application evolve at different paces, so it is of paramount importance to keep those layers separated and isolated from each other. Doing that enables us to make quick and nimble changes to our application code without disrupting the surrounding layers.

Example

Let’s take a simple example of maintaining core business entities, such as SKUs (Stock Keeping Units). Any retail business depends on SKUs, as these units are the lifeblood of the business operations. To implement SKUs in our online retail operations (an e-commerce store), we must allocate a facility that hosts SKU definitions. This facility must be completely isolated from any surrounding delivery mechanisms as well as from any other auxiliary computing infrastructure (such as a database, for example, or a flat file storage).

However, we typically see SKUs designed and implemented as rows of values stored in a database. This is far from desirable, but it is a concession to the current state of the technology. In our design, we should strive to decouple our definition of SKUs from any peculiarities of the underlying delivery/storage mechanisms. So it is best to start defining our SKUs via specifications. For our current hands-on example, I have chosen the tried-and-tested rspec framework. This framework has proven extremely beneficial because it allows us to declare our expectations using syntax that is very close to natural English, and yet the specifications are immediately executable.

When it comes to SKUs, our expectations are that each SKU attribute (such as SKU name, product name, product code, quantity, etc.) is defined by supplying the correct value. Using the rspec framework, here is how we might declare expected values for our SKU (let’s call it “First SKU”):

The above specification (I prefer to call it ‘SKU blueprint’) declares what it describes (it says describe “First SKU” on line 3). The next thing our blueprint does is set the precondition by loading the SKU values in the before section (lines 5–7). This precondition is important because it prepares the ground for the specification to execute.

The remaining body of the blueprint is concerned with setting the context (“First SKU values”, line 9), and then describing the expected values for all four SKU attributes. The way to read those lines (i.e. lines 9–31) is to note that the blueprint expects to find a specifically declared value for each SKU attribute. For example, when it comes to product name for the “First SKU”, the blueprint expects to find the value ‘Raincoat’. And so on.

Another important thing to note about the rspec blueprints is that they are, as we’ve already mentioned, fully executable. A script like the one above can be executed from the command line by simply typing rspec and hitting the enter key. Once the blueprint gets executed, it will report back whether the expectations declared inside the blueprint have been met or not.

Naturally, in our case, the expectations are not going to be met for the simple reason that we haven’t written even a single line of implementation code in the FirstSKU file (which was mentioned on line 6 in the specification code above). Our first order of business, then, is to create that file and add some code to it. As can be seen from the declared expectations on line 6, we are specifying/expecting that this code (FirstSKU) is capable of returning some values.

Once we implement FirstSKU by enabling it to return values, we can re-run our blueprint by typing rspec, after which we can examine the outcome of the executed code.

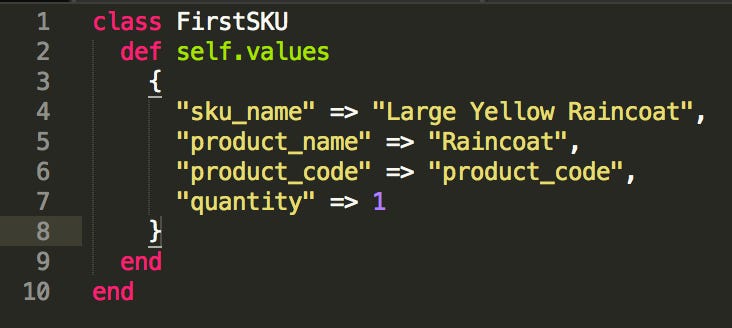

What would be the best way to implement FirstSKU? As with any other implementation, there are many ways to skin a cat. I prefer to keep things extremely simple, so I’d implement it as a construct that holds the expected values (save the implementation code below in the first_sku.rb file):
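A minimal sketch of such a construct, assuming a hypothetical `values` method that returns the declared attributes as a hash. Apart from ‘Raincoat’, the attribute values are illustrative placeholders.

```ruby
# first_sku.rb
# A plain Ruby class: no superclass, no collaborators, no persistence.
class FirstSKU
  # Returns the declared SKU attributes as a frozen hash.
  # The method name and hash keys are assumptions made for this sketch.
  def values
    {
      sku_name: "First SKU",
      product_name: "Raincoat", # the one value named in the text
      product_code: "RC-001",   # illustrative
      quantity: 25              # illustrative
    }.freeze
  end
end
```

Because the object only holds values, it can be inspected directly, for instance with `FirstSKU.new.values[:product_name]` in an irb session.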

Note how class FirstSKU is what we call a PORO — plain old Ruby object. This plain old object has no dependencies on any other objects, facilities, delivery channels or persistence mechanisms (notice how it does not inherit from any other class, nor does it contain any other object). That’s very advantageous, as it allows us to modify it and move it around without breaking anything. Most importantly, we can test it in isolation. This arrangement also enables us to troubleshoot any problems by simply examining the values without having to rely on any operational interface.

The advantage of writing the blueprint before implementing the executable code is that the specification of our expectations now places constraints on the design of the implemented code. Since no specifics regarding the storage of the values have been mentioned in our blueprint, we are free to implement the executable code by merely providing an object that holds those values. That way, we are deferring the actual underlying technical implementation by treating it as an annoying detail. Yes, the declared values will get persisted eventually, but how they are going to get persisted is irrelevant for our present intention. This approach keeps things nicely compartmentalized and separated, allowing us to produce a much coveted plug-and-play software architecture.

We may initially implement the persistence using a relational database, but then later on decide to switch to a NoSQL solution (such as CouchDB or DocumentDB, for example). We will be free to do so because our specification is not married to any of those underlying details, which frees us to leverage any technology that we may find appealing.
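The kind of swap described above can be sketched with two interchangeable stores. Both classes (`InMemorySkuStore`, `JsonFileSkuStore`) and their `save`/`find` interface are hypothetical stand-ins for real persistence technologies, and the SKU values other than ‘Raincoat’ are illustrative.

```ruby
require "json"
require "tmpdir"

# Hypothetical store that keeps SKUs in a hash.
class InMemorySkuStore
  def initialize
    @rows = {}
  end

  def save(code, attributes)
    @rows[code] = attributes
  end

  def find(code)
    @rows[code]
  end
end

# Hypothetical store that keeps SKUs in a flat JSON file.
class JsonFileSkuStore
  def initialize(path)
    @path = path
  end

  def save(code, attributes)
    rows = load_rows
    rows[code] = attributes
    File.write(@path, JSON.generate(rows))
  end

  def find(code)
    row = load_rows[code]
    row && row.transform_keys(&:to_sym) # JSON keys come back as strings
  end

  private

  def load_rows
    File.exist?(@path) ? JSON.parse(File.read(@path)) : {}
  end
end

# The SKU definition is identical whichever store is plugged in.
raincoat = { sku_name: "First SKU", product_name: "Raincoat", product_code: "RC-001", quantity: 25 }

stores = [
  InMemorySkuStore.new,
  JsonFileSkuStore.new(File.join(Dir.mktmpdir, "skus.json"))
]
stores.each { |store| store.save(raincoat[:product_code], raincoat) }
```

Neither store appears anywhere in the SKU definition, so replacing one with the other leaves the declared values untouched.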

So how would we go about implementing this last step, i.e. choosing the underlying persistence mechanism for our SKUs? If we decide to leverage a relational database, such as MySQL, we’d declare our intention in the config/database.yml file of our Rails project. Once our application is connected to the underlying relational database, we will populate (or seed) its SKUs by writing a simple db/seeds.rb script:
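A minimal sketch of such a seeding script. The `Sku` model, its column names, and every value except ‘Raincoat’ are assumptions; the `defined?(Rails)` guard is added here only so the file can also be loaded outside a Rails application.

```ruby
# db/seeds.rb (sketch)
# Three SKUs with their declared values. All values except "Raincoat"
# are illustrative placeholders.
SKU_SEEDS = [
  { sku_name: "First SKU",  product_name: "Raincoat",   product_code: "RC-001", quantity: 25 },
  { sku_name: "Second SKU", product_name: "Umbrella",   product_code: "UM-001", quantity: 40 },
  { sku_name: "Third SKU",  product_name: "Rain boots", product_code: "RB-001", quantity: 15 }
].freeze

# `Sku` is an assumed ActiveRecord model with matching columns.
# Outside Rails this block is skipped and only the data is defined.
if defined?(Rails)
  SKU_SEEDS.each do |attributes|
    # Look the SKU up by product code so the script can be re-run safely.
    Sku.find_or_create_by!(product_code: attributes[:product_code]) do |sku|
      sku.assign_attributes(attributes)
    end
  end
end
```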

The above snippet illustrates the seeding script where we are passing three SKUs with their declared values. This script then gets executed by typing rake db:seed on the command line and pressing the Enter key. The rake utility will then connect to the database that was declared in the config/database.yml file, and will then load SKU values as declared in the db/seeds.rb file.

Conclusion

We have chosen a fairly volatile aspect of a typical business application, frequently changing SKUs, and followed an architecturally sound approach to implementing it. First, we decided to keep the intention (i.e. the declared expectations) separate from the implementation (i.e. the underlying technology as a delivery channel). Keeping those two separate (i.e. compartmentalized and isolated) lets us separate the aspects of our application that change quickly from the aspects that are more stable and slower to change. For example, SKUs tend to change rather quickly (we keep adding new products and removing/inactivating some of the old ones), while the decision on how to store those SKUs is much less volatile. Even so, that decision may change: if we initially choose a relational database such as MySQL, we may later realize that a different database, such as PostgreSQL, makes more sense, or even that migrating to a NoSQL persistence layer, such as MongoDB or Cassandra, makes more sense.

To illustrate this architectural principle, we have chosen to use the rspec framework, it being one of the most prominent and mainstream frameworks for declaring our expectations. The advantage of that approach is that we end up with immediately executable expectations (we also call them our ‘business blueprint’). Once we have executable blueprints, they will guide our approach to implementing production code. One desirable side effect of this approach is that we most likely end up with fairly lean production code that is easy to maintain.